The Sophos UTM firewall is a great piece of security software. It is designed with businesses in mind but is also free for home use. It has many features, two of which (User Portal and Web Application Firewall) compete for the same port – TCP 443 (HTTPS). This is a shame if you want to run both services simultaneously but only have one IP address.

For some reason the folks at Astaro (Sophos) have not engineered a way to allow the WAF and User Portal to play nicely, saying on their forums to just configure them to use different ports. What if you have people who are behind firewalls that only allow ports 80 and 443? You are stuck.

I didn’t like that answer so I set out to research a way around this. The solution to this problem lies with Apache and its reverse proxy feature. A reverse proxy is a webserver that receives HTTP requests and forwards them to some other location, then returns the response.

My solution to the “I want both WAF and User Portal to use the same port” problem is to put the User Portal on a different, internal-only port, spin up a small Apache server, configure it to forward all requests to the User Portal address:port combination, and add it as a real server in the Sophos WAF.

Change user portal port

Easy enough: Go to Management / User Portal / Advanced tab, scroll down to the “Network Settings” section and pick a different port, then click apply.

Spin up a reverse proxy web server

I went with Ubuntu Server 14.04 so I could have newer software packages.

- Install Apache

sudo apt-get install apache2

- Enable needed modules

sudo a2enmod ssl

sudo a2enmod proxy

sudo a2enmod proxy_http

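- Configure Apache to proxy all requests to your user portal

#Add the following to default-ssl.conf, inside the <VirtualHost> block (if the file is not in sites-enabled yet, run "sudo a2ensite default-ssl" first)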

sudo vim /etc/apache2/sites-enabled/default-ssl.conf

SSLProxyEngine On

#Uncomment the next 3 lines if you want to ignore certificate errors

#SSLProxyVerify none

#SSLProxyCheckPeerCN off

#SSLProxyCheckPeerName off

#Configure the reverse proxy to forward all requests

ProxyPass / https://<your firewall IP>:<port you chose earlier>/

ProxyPassReverse / https://<your firewall IP>:<port you chose earlier>/

#Make sure slashes are at the end (important)
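To make the placeholders concrete, here is a minimal sketch that prints the three active directives with values filled in. The `proxy_directives` function is a hypothetical helper written for this post, not part of Apache or Sophos UTM, and the address 192.0.2.10 and port 4443 are example values only; substitute your own firewall IP and the port you chose earlier.

```shell
#!/bin/sh
# Hypothetical helper (not an Apache or Sophos tool): print the proxy
# directives for a given firewall address and User Portal port.
proxy_directives() {
    host="$1"
    port="$2"
    # Build the backend URL once so both directives get the same target,
    # including the trailing slash.
    target="https://${host}:${port}/"
    echo "SSLProxyEngine On"
    echo "ProxyPass / ${target}"
    echo "ProxyPassReverse / ${target}"
}

# Example values only: 192.0.2.10 is a documentation address, 4443 an arbitrary port.
proxy_directives 192.0.2.10 4443
```

After pasting the resulting lines into default-ssl.conf, running `sudo apache2ctl configtest` should report any syntax errors before you touch the running server.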

- Restart Apache (a full restart, not just a reload, so the newly enabled modules are loaded)

sudo service apache2 restart
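Once Apache is back up, it may be worth checking the proxy on its own before involving the WAF. The sketch below is one way to do that with curl; `check_proxy` is a hypothetical helper name, and the address you pass it is whatever your reverse proxy server uses. It assumes the proxy still serves a self-signed certificate, hence the `-k` flag.

```shell
#!/bin/sh
# Hypothetical helper: print the HTTP status code returned through the proxy.
check_proxy() {
    if [ -z "$1" ]; then
        echo "usage: check_proxy <proxy host or IP>" >&2
        return 2
    fi
    # -k  skip certificate verification (assumes a self-signed certificate)
    # -s  silent mode, no progress meter
    # -o  discard the response body
    # -w  print only the HTTP status code
    curl -k -s -o /dev/null -w '%{http_code}\n' "https://$1/"
}

# Usage (replace with your proxy server's address):
#   check_proxy 192.0.2.20
```

A 2xx or 3xx status suggests the request reached the User Portal. A 502 or 503 usually means Apache could not reach or negotiate SSL with the backend, in which case the ProxyPass target and the SSLProxy* settings are the first things to recheck.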

Add your reverse proxy to Sophos UTM

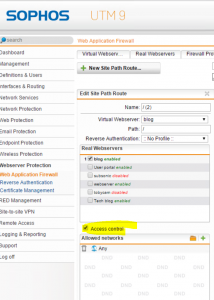

- Add your proxy server as a real webserver. Go to Webserver Protection / Web Application Firewall / Real Webservers and add your proxy server address. Make sure the type is “Encrypted HTTPS” (important).

- Add your desired URL as a virtual server and point it to your proxy real server (Virtual Webservers tab). You’ll need an SSL certificate for it; generating one is beyond the scope of this post.

Caveats

The above configuration will work with every function of the User Portal except for the HTML5 VPN gateway. For some inexplicable reason it has scripts hard coded to use the root directory, which Apache won’t proxy properly even if you have rewrite rules in place. I fiddled with this for hours before I finally gave up and looked elsewhere for an HTML5 VPN solution.

I settled on Guacamole. It’s more than just dip, it’s an excellent open source HTML5 RDP/VNC/SSH gateway. Unlike Sophos’s option, Guacamole properly handles being in a subdirectory. Unfortunately it is very frustrating and user-unfriendly to configure. I decided just to use a pre-configured VM appliance from Green Reed Technology. It’s an excellent appliance and “just works” – a much better experience than wrestling with archaic configuration files. You can get it from here.